ernanhughes

SMOTE

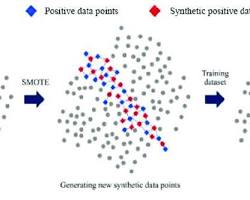

SMOTE (Synthetic Minority Over-sampling Technique) is a popular technique in machine learning used to address the issue of class imbalance in datasets. Class imbalance occurs when one class is significantly underrepresented compared to others. This can lead to poor performance of machine learning models, as they may become biased towards the majority class.
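To make the "biased towards the majority class" point concrete, here is a small sketch using scikit-learn's `DummyClassifier`, which is not part of SMOTE itself. A baseline that always predicts the majority class scores high accuracy on a 10%/90% dataset while never finding a single minority sample:

```python
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.metrics import accuracy_score, recall_score

# About 10% minority (class 0) and 90% majority (class 1).
X, y = make_classification(n_samples=1000, n_features=20, n_informative=2,
                           n_redundant=10, n_clusters_per_class=1,
                           weights=[0.1, 0.9], flip_y=0, random_state=1)

# A "model" that ignores the features and always predicts the most frequent class.
baseline = DummyClassifier(strategy="most_frequent").fit(X, y)
y_pred = baseline.predict(X)

accuracy = accuracy_score(y, y_pred)                  # close to the majority share
minority_recall = recall_score(y, y_pred, pos_label=0)  # share of class-0 samples found
print(f"accuracy: {accuracy:.2f}")
print(f"minority recall: {minority_recall:.2f}")      # 0.00
```

Accuracy alone hides the problem; per-class recall exposes it.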

Here’s a detailed explanation of SMOTE:

- Purpose: SMOTE is used to balance the class distribution by increasing the number of minority class samples in the dataset.

- How It Works:

- Selection of Minority Class Samples: SMOTE selects samples from the minority class.

- Creating Synthetic Samples: For each selected minority class sample, SMOTE finds its k nearest neighbors (typically k=5) within the same class. It then randomly selects one of these neighbors.

- Interpolation: A synthetic sample is generated by interpolating between the original sample and the selected neighbor. This means that a new sample is created at a randomly chosen point on the line segment joining the original sample and its neighbor.

- Repeat: This process is repeated until the desired level of balance between the classes is achieved.

- Advantages:

- Mitigates Overfitting: Unlike simple over-sampling (replicating minority class samples), SMOTE reduces the risk of overfitting by creating new, synthetic samples rather than duplicating existing ones.

- Improves Model Performance: By balancing the class distribution, SMOTE can help improve the performance of classifiers, especially those sensitive to class imbalance.

- Disadvantages:

- Synthetic Data May Not Be Perfect: The synthetic samples are interpolations between existing minority samples, so they stay within the region those samples already cover and may not fully capture the underlying distribution of the minority class.

- Complexity: SMOTE enlarges the training set and requires a nearest-neighbor search over the minority class, which increases preprocessing and training time, especially with large datasets.

- Usage:

- SMOTE is commonly used in classification problems where the class imbalance can lead to poor model performance.

- It is implemented in various machine learning libraries, such as imbalanced-learn in Python.
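The four steps under "How It Works" can be sketched from scratch in NumPy. This is an illustrative simplification, not imbalanced-learn's implementation: `smote_sketch` is a hypothetical helper, distances are assumed Euclidean, and the base sample is drawn uniformly at random each time rather than visiting every minority sample in turn.

```python
import numpy as np

def smote_sketch(X_min, n_synthetic, k=5, rng=None):
    """Hypothetical helper: create n_synthetic points from minority samples X_min."""
    rng = np.random.default_rng(rng)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    if n < 2:
        raise ValueError("need at least two minority samples")
    k = min(k, n - 1)  # cannot have more neighbors than other minority points

    # Pairwise squared Euclidean distances within the minority class only.
    diffs = X_min[:, None, :] - X_min[None, :, :]
    dists = np.einsum("ijk,ijk->ij", diffs, diffs)
    np.fill_diagonal(dists, np.inf)                # a point is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]   # k nearest neighbors per point

    synthetic = np.empty((n_synthetic, X_min.shape[1]))
    for s in range(n_synthetic):
        i = rng.integers(n)                  # select a minority sample
        j = neighbors[i, rng.integers(k)]    # randomly select one of its k neighbors
        gap = rng.random()                   # random position in [0, 1)
        # Interpolate: a point on the segment between sample i and neighbor j.
        synthetic[s] = X_min[i] + gap * (X_min[j] - X_min[i])
    return synthetic

# Usage: four minority points at the corners of the unit square.
X_min = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
new_points = smote_sketch(X_min, n_synthetic=6, k=2, rng=0)
print(new_points.shape)  # (6, 2)
```

Because every new point lies on a segment between two minority samples, all six points fall inside the unit square, which illustrates the "Synthetic Data May Not Be Perfect" limitation above.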

Here’s a simple example of how SMOTE can be used in Python with the imbalanced-learn library:

```python
from imblearn.over_sampling import SMOTE
from sklearn.model_selection import train_test_split
from sklearn.datasets import make_classification

# Generate a synthetic imbalanced dataset
X, y = make_classification(n_samples=1000, n_features=20, n_informative=2, n_redundant=10,
                           n_clusters_per_class=1, weights=[0.1, 0.9], flip_y=0, random_state=1)

# Split the dataset into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Apply SMOTE to the training data
smote = SMOTE(random_state=42)
X_train_resampled, y_train_resampled = smote.fit_resample(X_train, y_train)

# Now X_train_resampled and y_train_resampled are balanced and can be used to train a classifier
```

In this example, make_classification is used to generate a synthetic imbalanced dataset, and SMOTE is applied to balance the training data before training a machine learning model.

SMOTE: Synthetic Minority Over-sampling Technique

SMOTE is a technique used in machine learning to address imbalanced datasets, in which one class is significantly outnumbered by another.

How does SMOTE work?

- Identify the minority class: Determine which class has fewer instances.

- Select a minority class instance: Randomly choose a data point from the minority class.

- Find nearest neighbors: Identify the k nearest neighbors of the selected instance within the minority class.

- Create synthetic instances: Randomly choose one of those neighbors and generate a new data point at a random position on the line segment connecting the original instance and that neighbor. Repeat these steps until the minority class reaches the desired size.
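Each synthetic instance produced by the steps above can be written as an interpolation, where $\mathbf{x}_i$ is the selected minority instance, $\mathbf{x}_{nn}$ is the chosen neighbor, and $\lambda$ is drawn uniformly at random (the symbol names are chosen here for illustration):

$$
\mathbf{x}_{\text{new}} = \mathbf{x}_i + \lambda\,(\mathbf{x}_{nn} - \mathbf{x}_i), \qquad \lambda \sim \mathcal{U}(0, 1)
$$

For example, with $\mathbf{x}_i = (1, 2)$, $\mathbf{x}_{nn} = (3, 6)$ and $\lambda = 0.25$, the new point is $(1, 2) + 0.25 \cdot (2, 4) = (1.5, 3)$, a quarter of the way from $\mathbf{x}_i$ to its neighbor.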

By creating new synthetic instances of the minority class, SMOTE helps to balance the dataset and improve the performance of machine learning models.

Why is it important?

Imbalanced datasets can lead to biased models that perform poorly on the minority class. SMOTE helps to mitigate this by increasing the number of instances in the underrepresented class.

Example

Imagine a dataset predicting fraudulent transactions. Most transactions are legitimate (majority class), while fraudulent ones are rare (minority class). SMOTE would create synthetic instances of fraudulent transactions to balance the dataset, improving the model’s ability to detect fraud.

Important considerations

- Overfitting: Excessive use of SMOTE can lead to overfitting, where the model becomes too specialized in the synthetic data and performs poorly on new, unseen data.

- Choice of k: The number of nearest neighbors (k) can impact the quality of the synthetic instances. Experiment with different values to find the optimal setting.

- Other techniques: SMOTE is not the only approach to handling imbalanced data. Other techniques like under-sampling, class weighting, and ensemble methods can also be effective.
